Contact Discovery

For most messaging platforms, the first thing users do after creating an account is start adding people to their contact list, a process commonly known as Contact Discovery (CD). In fact, for many platforms, adding someone to your contact list is a necessary prerequisite to being able to talk to them. This is especially true for end-to-end secure platforms, like Wickr. That’s because for these platforms, adding an account to your contact list also entails obtaining the initial cryptographic key material for the account, a critical step in establishing a secure communication channel.

Contact Discovery can be difficult though. After all, before I’m even able to message someone, I already need to be able to come up with their account name so I can add it to my contact list. To help overcome this hurdle, many platforms allow users to piggyback on their external address book. So, if we are willing to forget about privacy for a moment, then here’s a “basic method” I can use for bootstrapping CD: first, I simply upload my entire address book (e.g., the one stored on my phone or in my email client) to the messaging platform’s CD server. Next, the server goes through all the email addresses, phone numbers, names, and/or other personally identifying information. For each entry, it checks if it matches an existing account in the platform’s account database. For example, I might have a phone number in my address book that is already associated with some existing account. Finally, the server notifies me of any matches so I can add those to my contact list.
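To make the basic method concrete, here is a minimal sketch in Python. The function name and the sample data are made up for illustration; a real CD server would of course sit behind a network API and a proper database.

```python
def basic_contact_discovery(address_book, account_db):
    """Server-side matching: return the uploaded entries that are existing accounts.

    Note that the server sees every entry of the address book in the clear.
    """
    return sorted(entry for entry in set(address_book) if entry in account_db)


# Hypothetical data, for illustration only.
account_db = {"+15550100", "+15550142", "persephone@hades.gov"}
address_book = ["+15550142", "+15550199", "persephone@hades.gov"]

matches = basic_contact_discovery(address_book, account_db)
print(matches)
```

The non-matching entry (`+15550199`) is still handed to the server, which is exactly the privacy problem discussed next.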

Secure Contact Discovery

Unfortunately, this basic approach has pretty glaring privacy issues, as it requires users to reveal the entire contents of their address book to the platform’s CD server. Indeed, one of the greatest challenges when designing a CD mechanism is to build a system that provides the same functionality as this basic approach but that simultaneously also provides the following three properties of what I’ll call an “Ideal CD” system:

- The CD server should learn nothing about any entries in my address book that do not match an existing account.

- The process should provide some mechanism for the platform to limit the rate at which I can test for existing accounts.

- The process should require only a reasonable amount of time to complete, even on a device with limited CPU power, memory, or bandwidth, or with a capped data plan. Similarly, the server’s resource load needs to be kept within reason so that it doesn’t become a bottleneck during multiple concurrent CD sessions.

The first property is basically about protecting people’s metadata. After all, whom I know can often be at least as sensitive as what I am saying. If I’m part of a network of political activists operating under an oppressive regime, the mere fact that I know other activists may be incriminating enough to have serious consequences for me. To make matters worse, the basic method for performing CD actually leaks all users’ address books to the same central entity (namely the CD server), making this an extremely valuable target for compromise attacks by interested third parties.

The second property is really also about protecting users’ privacy, e.g., from de-anonymization attacks. Having an enforceable rate limit can significantly raise the cost and time required to perform such attacks. Moreover, it turns out that, in practice, this is a real concern and the lack of such rate limits has been effectively used in attacks. For instance, the Rocket Kitten APT group used it to de-anonymize 15 million Iranian Telegram users by rapidly enumerating all Iranian phone numbers using the Telegram CD API (see the Reuters report listed in the References).

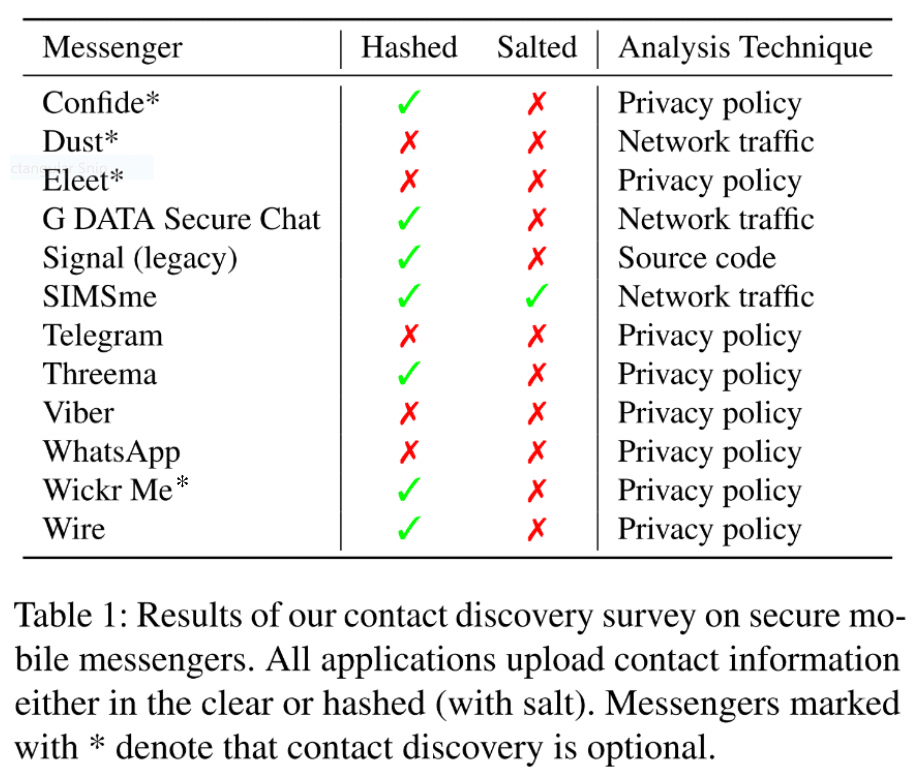

It’s worth noting that several major messaging platforms (e.g., WhatsApp, Telegram, and Viber) actually take exactly the basic approach described above (though hopefully by now all of them implement at least some form of rate limit).
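As a rough idea of what “some form of rate limit” could look like, here is a minimal sliding-window limiter in Python. The class name, the per-client keying, and the numbers are my own assumptions for illustration, not how any of the platforms above actually do it.

```python
class LookupRateLimiter:
    """Allow at most `max_lookups` CD lookups per client in any `window` seconds."""

    def __init__(self, max_lookups, window):
        self.max_lookups = max_lookups
        self.window = window
        self.history = {}  # client_id -> timestamps of recently allowed lookups

    def allow(self, client_id, now):
        # Keep only the lookups that still fall inside the sliding window.
        recent = [t for t in self.history.get(client_id, []) if now - t < self.window]
        allowed = len(recent) < self.max_lookups
        if allowed:
            recent.append(now)
        self.history[client_id] = recent
        return allowed


# Hypothetical policy: 3 lookups per 60 seconds. Timestamps are passed in
# explicitly so the example is deterministic.
limiter = LookupRateLimiter(max_lookups=3, window=60.0)
decisions = [limiter.allow("client-1", t) for t in (0.0, 1.0, 2.0, 3.0, 61.0)]
print(decisions)
```

With a limit like this in place, enumerating an entire national phone number range takes a single client a very long time, which is the whole point.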

Best Practice for CD

At this point, it’s worth pointing out that there are some pretty effective things the platform can do to take the edge off the privacy issues with CD. This is one thing in particular that Wickr Me really gets right. First, it gives users the explicit choice whether to upload their address book for CD. Second, and in my opinion most importantly, it lets people choose account names as arbitrary strings and does not require any further data to be associated with the account. So, if you don’t want people to be able to find your account, then you can choose to not tie it to any email address, phone number, real-world name, or other information. I don’t just mean that this information won’t be made public/searchable; rather, it’s simply never registered in the system. Even Wickr doesn’t know anything about your account beyond the name you chose for it.

Having said that, these measures are surprisingly rare among messaging platforms (even those billed as offering “secure” messaging). Instead, most platforms will (publicly!) associate phone numbers and/or email addresses with accounts, often even directly using them as the account name. It’s also pretty common practice to automatically upload users’ address books in the background as soon as they create an account or add entries to their address book. So if people don’t want to reveal this information to the platform, it’s left to them to try to use the OS’s security features to block the app’s access to their address book. Which is not really an ideal solution.

Some Better Designs

Of course, bootstrapping CD by checking against your address book is a very useful feature to have. So, it would be really great if we could build new mechanisms for bootstrapping CD that more closely resemble the “Ideal CD” described above.

One such improvement over the basic approach is for users to first mask the information they upload for CD using a cryptographic hash function (e.g., SHA-256). That is, instead of uploading each phone number from their address book, they would upload its hash. Similarly, the server first computes the hash of all the accounts in its user DB and then compares those with the uploaded hashes to see if there are any matches.

The idea is that telling someone the hash of a string X doesn’t reveal anything about X other than giving that person a way to test whether X = Y for some other string Y. So, the more uncertainty an attacker has about what data I’m uploading, the harder it is for them to guess the data correctly. And as long as they haven’t guessed correctly, the hash should hide the data from the attacker. (At least beyond just letting them know that the data doesn’t match any of the failed guesses they’ve already made.) This is the approach taken by most secure messaging platforms, including Wickr Me. Table 1 presents a more complete survey of how different messaging platforms handle CD, as found in the Mobile Private Contact Discovery paper listed in the References. (Note that the paper mislabeled Wickr Me as not making CD optional, which I fixed here.)
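Here is a small Python sketch of the hashing approach, using SHA-256 from the standard library. The function names and sample identifiers are hypothetical, and a real deployment would also need to normalize identifiers (e.g., phone number formats) before hashing.

```python
import hashlib


def h(identifier: str) -> str:
    """Mask an identifier with SHA-256."""
    return hashlib.sha256(identifier.encode("utf-8")).hexdigest()


def client_upload(address_book):
    # The client uploads only hashes, never the raw entries.
    return {h(entry) for entry in address_book}


def server_match(uploaded_hashes, account_db):
    # The server hashes its own account identifiers and intersects the two sets.
    return uploaded_hashes & {h(account) for account in account_db}


def client_resolve(address_book, matched_hashes):
    # The client maps the matching hashes back to its own entries.
    return sorted(entry for entry in address_book if h(entry) in matched_hashes)


# Hypothetical data, for illustration only.
account_db = {"+15550100", "+15550142"}
address_book = ["+15550142", "+15550199"]

matched = server_match(client_upload(address_book), account_db)
found = client_resolve(address_book, matched)
print(found)
```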

This “hashing approach” does improve things compared to the basic approach. However, its effectiveness still depends on the capabilities and goals of the attacker (rather than, say, only depending on the security of some underlying cryptography). Generally, the less the attacker knows about the contact information being uploaded, the harder it will be for them to guess the correct value; i.e., the more work they will have to do to invert the hashes. Conversely, the more computational power and time the attacker has, the more guesses they can make. Still, we can raise the adversary’s cost per guess by using an “expensive to compute” hash function, like those explored in my research on moderately hard computation. However, there is a limit to this type of defense, as it also requires honest users to evaluate the expensive hash function on each chunk of data they want to upload.
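To see why low-entropy data like phone numbers is a problem here, consider this sketch of a guessing attack. Everything in it is an assumption for illustration: the candidate space is a made-up block of 10,000 numbers, and PBKDF2 (with a fixed public salt and a tiny iteration count) merely stands in for an “expensive” hash; it is not one of the moderately hard functions from the research mentioned above.

```python
import hashlib


def fast_hash(s: str) -> bytes:
    return hashlib.sha256(s.encode("utf-8")).digest()


def slow_hash(s: str, iterations: int = 50) -> bytes:
    # A fixed public salt, so client and server derive the same value.
    return hashlib.pbkdf2_hmac("sha256", s.encode("utf-8"), b"cd-demo-salt", iterations)


def invert(target: bytes, candidates, hash_fn):
    """Brute-force a hash by trying every candidate; returns (preimage, guesses)."""
    guesses = 0
    for candidate in candidates:
        guesses += 1
        if hash_fn(candidate) == target:
            return candidate, guesses
    return None, guesses


# Hypothetical number space: 10,000 candidates in one made-up prefix.
candidates = [f"+1555{n:04d}" for n in range(10_000)]
secret = "+15554242"

recovered, guesses = invert(fast_hash(secret), candidates, fast_hash)
recovered_slow, guesses_slow = invert(slow_hash(secret), candidates, slow_hash)
print(recovered, guesses)
```

The expensive hash does not reduce the number of guesses the attacker needs. It only multiplies the cost of each guess (here by the iteration count), and honest clients pay that same factor for every entry they upload.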

An extension of this “hashed” method (currently being used by Signal) is for the server to do the comparisons of the uploaded hashes and the hashed account names inside a secure enclave (i.e., SGX). This way, before uploading the hashes, the user can encrypt them in such a way that only the server’s SGX enclave can decrypt them again (but not, say, the server’s OS or anything in between). Moreover, before the user uploads any data, it first runs a “remote attestation” protocol with the enclave in order to verify that the enclave is running the expected CD software (and nothing else). The idea here is to put an extra defense between an attacker controlling the server and the users’ uploaded data. That’s because even the server should not be able to read or write anything that happens inside the enclave.

So, does this type of system solve our problem? Well, unfortunately, in practice it turns out that this is less true than we might hope. Indeed, we’ve seen a steady rise in attacks on the security boundaries implemented by hardware (of which SGX is one example). For example, the recent Foreshadow attack abused speculative execution to extract information (e.g., cryptographic key material) from an SGX enclave. (See CVE-2018-3615 for more.)

Another issue (for some at least) is that remote attestation (a central part of the entire process) essentially requires all parties to trust the server’s hardware producer, which, in the case of SGX, means Intel. But really, at the end of the day, my concern here is that the whole thing relies on what’s really a very strong assumption about the server’s hardware security and we want that assumption to hold true even if the hardware is directly taken under (even physical) control by an advanced, well-resourced, and persistent attacker. Given the enormous amounts of sensitive data passing through the CD server, it’s worth asking if we can do better.

Private Set Intersection

Cryptography is all about trying to get more security from weaker assumptions. So, let’s take a step back for a second and look at the general computational problem of which CD is a special case. Basically, we have two parties (i.e., a user and the server) who each have their own set of strings (i.e., address book data and the account DB, respectively). Their goal is for the user to learn the intersection of the two sets without either party revealing anything about the strings not in the intersection to the other party. In cryptography, this problem is well known as “Private Set Intersection” (PSI) and it is a long-standing topic of study within the wider field of secure Multiparty Computation (MPC). As a result, we actually already have a myriad of protocols for doing PSI. Unfortunately, the vast majority of these protocols have remained squarely in the realm of theory (at least at the scales and efficiency constraints for doing real-world CD in a large messaging platform, like Wickr Me). They typically need extreme amounts of bandwidth and computation, and consequently time, to run.

However, recently things have been starting to reach a tipping point. This blog post was inspired by a recent project by some cryptography and security researchers at the Technical Universities of Graz, Austria, and Darmstadt, Germany. The results were just presented at the 28th USENIX Security Symposium this August and they are, frankly speaking, an eye opener. Their work can be read in detail in the paper by Kales et al. listed in the References.

Basically, the researchers pulled together a host of techniques from PSI and general MPC as well as a couple of new ones they devised themselves. Next they implemented an optimized version of their protocol and ran a bunch of benchmarks in quite realistic settings, measuring things like bandwidth and computation time for various parameter sets (e.g., a client address book size of up to 2^10 and a server DB size of up to 2^28). The results they reported were highly impressive. The next sections describe the benchmarks and their results.

PSI Protocol for CD

To understand the benchmarks, we first have to take a closer look at the new PSI protocol. At a high level, it consists of 4 phases.

- The “Base Phase” does not depend on the inputs (i.e., the contact info). Instead, it mainly just precomputes cryptographic material for use in the later stages. Its complexity is linear in terms of the maximum set size of the client; that is, the maximum number of entries from its address book that it wants to test against the account DB on the server. Of course, if later on that upper bound is reached, the client and server can always run another Base Phase, preparing more cryptographic material as needed.

- The “Setup Phase” involves a large amount of computation on the server side. This results in a data blob that is downloaded by any client that wants to do CD. Basically, the computational complexity is linear in terms of the server’s set size; i.e., it needs to perform a couple of (symmetric) cryptographic operations per existing account in the messaging platform. This results in an (extremely compressed) representation, specifically in the form of a Cuckoo filter, of all the accounts on the network. A Cuckoo filter is a data structure that represents a set of elements (e.g., strings). It allows for the three following operations to be performed very efficiently: a) checking if a string is included in the set (with a small probability of a false positive), b) inserting a new string into the set, and c) removing a string from the set. However, so as not to leak too much information to clients when they download the filter, each account is first passed through a so-called pseudo-random function (PRF). Essentially, PRFs are deterministic keyed cryptographic functions mapping strings to fixed-length outputs (128 bits in this case). (Common practical examples include HMAC-SHA256 and AES-256.) A basic security property of PRFs is that, if you don’t know the key, then the function will look like it’s just outputting uniform random strings for each new input. So intuitively, without knowing the PRF key, the outputs tell you nothing at all about the input. In this way, at the end of the Setup Phase, the server sends the populated Cuckoo filter to the client, yet the client still learns nothing about any of the existing account names since it can’t actually evaluate the PRF on its own. Crucially, the same Cuckoo filter (and thus the same Setup Phase) can be reused across any number of clients, thus amortizing the computational cost to the server.

- Next is the “Online Phase.” Basically, this is where the cryptographic material from the base phase is used to help the client and server run what’s called an “Oblivious PRF” (OPRF) evaluation protocol. This is a (one round trip) protocol, where the client input to the session is a string X (presumably some entry from its address book, like X = “persephone@hades.gov”) and the server input is the PRF key k it used in the Setup Phase. The protocol ensures that the server learns nothing at all about X, while the only thing the client learns is the output Y = PRF(k, X), i.e., the value of the PRF evaluated on its input string using the server’s key. Now, all that remains is for the client to check if Y is contained in the Cuckoo filter it got during the Setup Phase. If so, then it knows that X corresponds to an existing account in the network. Otherwise, when Y is not in the Cuckoo filter, then there is no account with the name X. Because the OPRF protocol hides the client’s inputs and this is the only time the client even communicates anything input dependent with the server, we can see that the server learns nothing at all about the contents of the client’s address book (other than an upper bound on its size). In other words, we get the first security property of our ideal CD system based only on the assumption that the underlying cryptography is sound. That is, we’ve avoided making any hardware security assumptions about the server. We’ve also avoided any need for placing limits on an attacker’s uncertainty about the uploaded data and about an attacker’s computational resources. Another nice feature is that it creates an easy way for the server to enforce rate limits on how many strings a client can check membership for. Recall that the client only learns Y but not, say, anything about the PRF key k. That means that for the next string X’ it wants to test, it will first have to run a new OPRF session with the server to learn PRF(k, X’). 
So, to enforce a rate limit, all the server needs to do is limit the rate of OPRF sessions it runs with the client. (Of course, in practice you’d run your multiple sessions in parallel rather than sequentially to speed things up. But that won’t harm the server’s ability to limit the rate of sessions.)

- Now, in practice both the client’s address book and the server’s membership DB will change over time. For the client, that shouldn’t be a problem since it can always run further Online (and Base) Phases as it gets new address book entries. What’s nice though, is that changes to the membership DB only result in the need for further computation and bandwidth proportional to the number of accounts being added and removed. That’s because Cuckoo filters also support deletion (in contrast to the more widely known Bloom filters). So, during an “Update Phase,” all the server really has to do is evaluate the PRF on the entries it wants to add/remove and then insert/delete the results into/from the Cuckoo filter. Then, it simply sends over the difference between the old and new Cuckoo filter to the clients. It turns out here that, for a realistically sized change to the membership DB, this approach takes far less computation and bandwidth than re-computing and sending over the entire filter from scratch would.
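Since the Cuckoo filter does so much of the work in the phases above, here is a toy version in Python showing the three operations (lookup, insert, delete). This is a textbook-style sketch with parameters I picked for readability (16-bit fingerprints, 4 slots per bucket, SHA-256 as the hash); the filter in the paper is far more carefully tuned and compressed.

```python
import hashlib
import random


class CuckooFilter:
    """Toy Cuckoo filter storing short fingerprints in two candidate buckets."""

    def __init__(self, num_buckets=1024, bucket_size=4, max_kicks=500, seed=0):
        assert num_buckets & (num_buckets - 1) == 0, "num_buckets must be a power of two"
        self.m = num_buckets
        self.bucket_size = bucket_size
        self.max_kicks = max_kicks
        self.buckets = [[] for _ in range(num_buckets)]
        self.rng = random.Random(seed)

    @staticmethod
    def _hash(data: bytes) -> int:
        return int.from_bytes(hashlib.sha256(data).digest()[:8], "big")

    def _fingerprint(self, item: bytes) -> int:
        return self._hash(b"fp" + item) % 0xFFFF + 1  # 16-bit value in 1..65535

    def _alt(self, index: int, fp: int) -> int:
        # Partial-key cuckoo hashing: the other bucket depends only on the
        # current bucket and the fingerprint, so entries can be relocated later.
        return (index ^ self._hash(fp.to_bytes(2, "big"))) % self.m

    def _locate(self, item: bytes):
        fp = self._fingerprint(item)
        i1 = self._hash(item) % self.m
        return fp, i1, self._alt(i1, fp)

    def contains(self, item: bytes) -> bool:
        fp, i1, i2 = self._locate(item)
        return fp in self.buckets[i1] or fp in self.buckets[i2]

    def insert(self, item: bytes) -> bool:
        fp, i1, i2 = self._locate(item)
        for i in (i1, i2):
            if len(self.buckets[i]) < self.bucket_size:
                self.buckets[i].append(fp)
                return True
        # Both buckets are full: evict a resident fingerprint and move it on.
        i = self.rng.choice((i1, i2))
        for _ in range(self.max_kicks):
            j = self.rng.randrange(len(self.buckets[i]))
            fp, self.buckets[i][j] = self.buckets[i][j], fp
            i = self._alt(i, fp)
            if len(self.buckets[i]) < self.bucket_size:
                self.buckets[i].append(fp)
                return True
        return False  # too full; a real implementation would resize or rebuild

    def delete(self, item: bytes) -> bool:
        fp, i1, i2 = self._locate(item)
        for i in (i1, i2):
            if fp in self.buckets[i]:
                self.buckets[i].remove(fp)
                return True
        return False


# In the protocol, the inserted items would be PRF outputs, not raw account names.
cf = CuckooFilter()
for item in (b"prf-output-1", b"prf-output-2", b"prf-output-3"):
    cf.insert(item)
cf.delete(b"prf-output-2")
print(cf.contains(b"prf-output-1"), cf.contains(b"prf-output-2"))
```

Because only fingerprints are stored, lookups can produce false positives but never false negatives for items that are still in the filter. Deletion is what makes the cheap Update Phase possible.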

At the level of generality described here, this approach to an (unbalanced) PSI is relatively well known. The actual technical optimizations used and invented in this work are well beyond the scope of this blog post. But for those interested, I’d suggest having a closer look at the paper by Kales et al. listed in the References. First, it’s well written, making it accessible even to non-cryptographers, and second, if nothing else, it’s a pretty sweet testament to the power of collaborative open research binding together long threads of painstaking work developed by cryptographers in many institutions, countries, and over decades.
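At that same level of generality, the data flow of the phases can be sketched in a few lines of Python. To be very clear about the simplifications: HMAC-SHA256 (truncated to 128 bits) stands in for the PRF, a plain Python set stands in for the Cuckoo filter, the Base Phase is omitted entirely, and, most importantly, the “OPRF session” below is just a direct function call in which the server does see X. A real OPRF would hide X from the server. All class and method names are made up for this sketch.

```python
import hashlib
import hmac
import secrets


class Server:
    def __init__(self, accounts, max_sessions_per_client=1000):
        self.key = secrets.token_bytes(32)  # PRF key k, never leaves the server
        self.accounts = set(accounts)
        self.max_sessions = max_sessions_per_client
        self.sessions = {}  # client_id -> number of OPRF sessions used

    def _prf(self, x: str) -> bytes:
        return hmac.new(self.key, x.encode("utf-8"), hashlib.sha256).digest()[:16]

    def setup_phase(self):
        # Stand-in for building the Cuckoo filter over PRF(k, account).
        return {self._prf(account) for account in self.accounts}

    def oprf_session(self, client_id, x):
        # PLACEHOLDER for the Online Phase: NOT oblivious, the server sees x here.
        used = self.sessions.get(client_id, 0)
        if used >= self.max_sessions:
            raise PermissionError("rate limit reached")
        self.sessions[client_id] = used + 1
        return self._prf(x)

    def update_phase(self, added=(), removed=()):
        added, removed = list(added), list(removed)
        self.accounts |= set(added)
        self.accounts -= set(removed)
        # Only the PRF values of the changed accounts are sent to clients.
        return {self._prf(a) for a in added}, {self._prf(a) for a in removed}


class Client:
    def __init__(self, client_id, server):
        self.client_id = client_id
        self.server = server
        self.filter = set(server.setup_phase())  # downloaded once, then cached

    def discover(self, address_book):
        return [x for x in address_book
                if self.server.oprf_session(self.client_id, x) in self.filter]

    def apply_update(self, added, removed):
        self.filter |= added
        self.filter -= removed


# Hypothetical accounts and address book entries.
server = Server(["persephone@hades.gov", "+15550142"], max_sessions_per_client=3)
client = Client("client-1", server)
found = client.discover(["+15550142", "+15550199"])

added, removed = server.update_phase(added=["+15550199"])
client.apply_update(added, removed)
found_after_update = client.discover(["+15550199"])
print(found, found_after_update)
```

Note how the rate limit falls out naturally: the client cannot compute the PRF itself, so every lookup costs it one session that the server can count.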

Benchmarking Data

Up to this point, it all sounds like a great story, but the part that really caught my eye and the reason for this post are the results from the benchmarking. We’ll go through the results for the first three phases, which are summarized in three tables in their work.

The Setup:

The researchers implemented 3 variants of their protocol (based on 3 different concrete PRFs) and measured each one’s performance. The first PRF was based on the AES block cipher, while the second was based on a block cipher called LowMC. On the one hand, AES is probably the world’s most well-studied block cipher, giving us about as strong a confidence as we can hope for in its security (and by extension that of the PRF used in this work). On the other hand, LowMC was designed specifically to reduce the number of multiplications needed during encryption/decryption, as this is often good for reducing the MPC protocol complexity. Finally, the third PRF was (an Elliptic Curve instantiation of) an older PRF called the Naor–Reingold PRF, or NR for short. The security of this is based on a pretty fundamental and well-studied math problem called the Discrete Logarithm problem (in elliptic curve groups).
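For readers who haven’t seen it, the NR PRF is simple enough to write down. With a key (a_0, a_1, ..., a_n) and an n-bit input x, the output is g raised to the power a_0 times the product of all a_i for which bit x_i is 1. The Python sketch below uses a tiny multiplicative group modulo a prime instead of an elliptic curve, purely so that it runs with the standard library. The parameters are toy-sized and completely insecure, and the function names are mine.

```python
import secrets

# Toy group: the squares modulo the safe prime P = 2*Q + 1 form a subgroup of
# prime order Q. These numbers are far too small for any real security.
P = 2879
Q = 1439
G = 4  # 4 = 2^2 is a square and not 1, so it generates the order-Q subgroup


def nr_keygen(n_bits):
    """Key (a_0, a_1, ..., a_n) with each a_i drawn from 1..Q-1."""
    return [secrets.randbelow(Q - 1) + 1 for _ in range(n_bits + 1)]


def nr_prf(key, x_bits):
    """Naor-Reingold: g^(a_0 * product of a_i over all i with x_i = 1)."""
    assert len(key) == len(x_bits) + 1
    exponent = key[0]
    for a_i, bit in zip(key[1:], x_bits):
        if bit:
            exponent = (exponent * a_i) % Q  # exponents live modulo the group order
    return pow(G, exponent, P)


def to_bits(data: bytes):
    return [(byte >> i) & 1 for byte in data for i in range(8)]


x_bits = to_bits(b"hi")  # a 16-bit toy input
key = nr_keygen(len(x_bits))
y = nr_prf(key, x_bits)
print(y)
```

The algebraic structure (one exponentiation, with the exponent built up from per-bit key elements) is a large part of what makes this PRF convenient to evaluate obliviously.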

Each implementation included an ARM-optimized client, which they benchmarked using a Pixel 2 XL phone with a Snapdragon 835 SoC @ 2.45 GHz and 4 GiB of RAM. For their server implementation, they used an Intel Core i7-4600 @ 2.6 GHz with 16 GiB of RAM. So, a pretty realistic setup (albeit, maybe a bit on the high end for a phone).

Then they ran a bunch of benchmarks where they mainly measured the time required for various operations. For network transmissions, they measured the time on a WiFi connection with 230 Mbit/s up/download bandwidth and a 70 ms round trip time (RTT). They also measured the transmission times on an LTE connection, which had 42 Mbit/s download and 4 Mbit/s upload bandwidth with an 80 ms RTT. Again, a bit on the high end but otherwise quite realistic.

They explored the protocols’ behavior for server-side account DB sizes in the range of Ns = 2^20 up to Ns = 2^28 entries. So maybe not quite as big as the very largest of global messaging platforms but pretty darn close already. Client side, they benchmarked address books with between Nc = 1 and Nc = 2^10 entries. It seems like a pretty fair assumption that few people will ever have more than 1,000 contacts in their address book (though one could argue that for each contact, one might want to check several different entries, like name, emails, and phone numbers). Still, 1,000 does seem like a reasonable number to work with.

Finally, all the cryptographic primitives were instantiated at the 128-bit security level and the false positive rate for the Cuckoo filter was set to be at most 2^(-29.4).
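To get a feel for what that false positive rate means in practice, here is a quick back-of-the-envelope calculation in Python. It assumes the largest benchmarked address book (2^10 lookups) and treats lookups as independent, which is my simplification rather than a claim from the paper.

```python
import math

fp_rate = 2 ** -29.4   # upper bound on the per-lookup false positive rate
n_lookups = 2 ** 10    # largest client address book in the benchmarks

# Expected number of false positives in one full CD run (also a union bound
# on the probability of seeing at least one).
expected_false_positives = n_lookups * fp_rate

# Probability of at least one false positive, assuming independent lookups.
p_at_least_one = -math.expm1(n_lookups * math.log1p(-fp_rate))

print(f"{expected_false_positives:.3e} {p_at_least_one:.3e}")
```

In other words, even when checking a full 1,024-entry address book, the chance of a single spurious match is on the order of one in a million.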

The Results:

I’ve included a few of the relevant tables from the paper here so that we can pick them apart a bit. There’s way more in the paper though, so have a look there for the details.

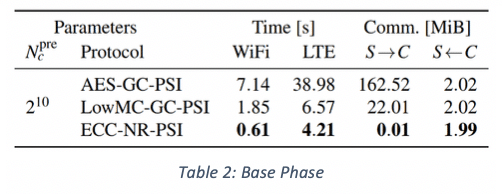

- The Base Phase benchmarking results are listed in Table 2. It looks like even in the worst case, i.e., with the slowest protocol for this phase (the one using AES) and when using the slowest network connection, this phase completed in under 40 seconds. What’s more, the only time this phase would really impact the user’s experience is when it’s run right after joining. Although it can be run without any inputs from the client, realistically many clients will want to perform initial CD right after creating their account, which means they’ll have to wait for the Base Phase to complete before they can move on to the Online Phase. (In contrast, building the Cuckoo filter in the Setup Phase can be done by the server before a new user even creates their account, so from the user’s perspective this will most likely not impact their experience at all.) Any subsequent Base Phases can be quietly run in the background pre-emptively as they require no knowledge of the client’s address book. That way, in the future, when the user adds more entries to their address book, the required cryptographic material will be all ready to go.

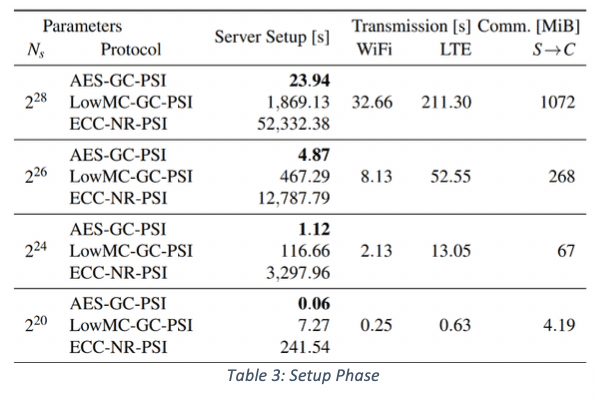

- The Setup Phase is the most time consuming of any of the phases. However, the good news is that the most time-consuming part (namely the evaluations of the PRF and populating of the Cuckoo filter) is simply the local computation performed by the server, so it can take place before any interaction with new users. Moreover, the resulting filter can be reused across any number of users. So, although in the worst case this could take around 15 hours, it seems that even for the largest messaging systems this step is again not really prohibitively expensive. Now, realistically it would probably be prudent to periodically choose a fresh PRF key and to rerun the Setup Phase from scratch to build a completely new Cuckoo filter. This would probably help mitigate some forms of collaborative rate limit circumvention. But really, it’s just a matter of good practice to design cryptographic systems in such a way that the key material is rotated/updated as often as is practical. After all, secrets tend to eventually leak, so at least give them an expiry date whenever possible. Probably one of the biggest issues with the benchmark results for the whole protocol is that for a large enough number of accounts in the DB, the Cuckoo filter will end up being on the order of a gigabyte in size. Now that’s not impossible to store on your average cellphone, but it’s not exactly ideal either. Of course, the client could always delete the filter after the current CD session is done, but then they’d need to download it again whenever they want to start a new one (e.g., because they just added a new entry to their address book), so you’d probably end up wanting to store it locally anyway. Ultimately, this is going to significantly increase the footprint of the app on the device. Though as devices grow in power, this will become less and less of an issue. And to be fair, my most heavily used messaging apps already have footprints of several GiB on my devices.
But the real issue with a large filter is not the local client-side storage, but the cost of transferring a GiB from the server to the client over a cell phone network. For many users, downloading a GiB using their data plans will be prohibitively expensive, meaning they won’t be able to do (their first) CD until they have a broadband connection available. Once that’s taken care of though, future CD could take place over the phone networks again using the cached filter, since now all that would be left to do is to run the much more efficient Online Phase.

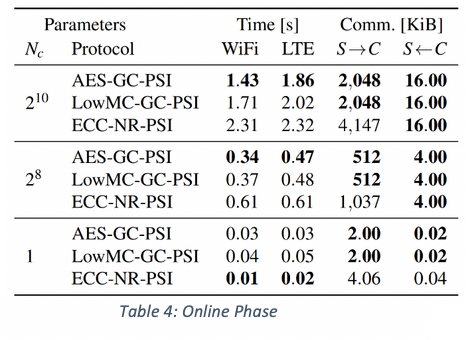

- Recall that the Online Phase consists of an Oblivious PRF evaluation per contact entry and is followed by the client checking locally if the result is already in their Cuckoo filter. Thus, this process is pretty much independent of the size of the server’s account DB, which is why that parameter (Ns) is not listed in Table 4. The good news here is that the Online Phase looks to be very lightweight; e.g., even for an address book with 1,000 entries, the slowest protocol didn’t need more than 2.5 seconds over an LTE connection. This is helped by the fact that the data transfer consists overwhelmingly of data from the server to the client, which lines up nicely with the asymmetry in the vast majority of phone connections’ upload/download speeds. The light weight of this phase is especially important because unlike other (more time-consuming) steps in the process (like computing as well as downloading the initial Cuckoo filter), the Online Phase cannot be amortized across different lookups.
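To put the initial filter download discussed above into perspective, here is a rough estimate of the raw transfer time using the link speeds from the benchmark setup. It assumes a filter of exactly 1 GiB and ideal throughput with no protocol overhead, both of which are my simplifications. And of course it says nothing about what that GiB costs on a capped data plan, which is the bigger problem.

```python
def transfer_seconds(size_bytes, mbit_per_s):
    """Ideal transfer time, ignoring latency and protocol overhead."""
    return size_bytes * 8 / (mbit_per_s * 1_000_000)


GIB = 2 ** 30  # assumed filter size: exactly 1 GiB

lte_seconds = transfer_seconds(GIB, 42)    # LTE download speed from the benchmarks
wifi_seconds = transfer_seconds(GIB, 230)  # WiFi speed from the benchmarks

print(f"LTE: {lte_seconds / 60:.1f} min, WiFi: {wifi_seconds:.0f} s")
```

So the download itself is a matter of minutes even over LTE. It is the data volume, not the waiting time, that makes a broadband connection preferable for the first CD run.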

Conclusions

At the end of the day, the practicability of these new protocols for CD lies in the eye of the beholder and will vary from case to case. It will depend on various things, like how much value is given to users’ privacy vs. usability. Is this a system that will be used primarily by PCs with a broadband connection, or should it work on old phones with limited data plans and bandwidth? How important is it for users to be able to immediately do CD even over phone networks with small data plans? And how long, and under which conditions, are we willing to have users wait for CD?

Moreover, there is still a fair amount of engineering that needs to be done for this system to really be production ready. Implementing this sort of security-sensitive cryptographic software at production quality levels involves a host of resource-intensive aspects: things like having constant-time code for each OS and device class that’s supported and auditing the code for vulnerabilities and other bugs. All of this is made many times more difficult than usual as, under the hood, this protocol uses some pretty advanced cryptographic tools that are not widely implemented yet.

Still, for me at least, the main takeaway here is that we’re really a lot closer to getting this stuff out into the world than I had realized. After all, while most of the PSI literature in the past has appeared at purely cryptographic and even theory conferences, this publication was presented at USENIX, which is primarily a security conference grounded in real-world applications.

References

Mobile Private Contact Discovery – Daniel Kales, Christian Rechberger, Thomas Schneider, Matthias Senker, Christian Weinert. Appearing at the 28th USENIX Security Symposium. August 14–16, 2019. Santa Clara, CA, USA. Available at: https://encrypto.de/papers/KRSSW19.pdf

Exclusive: Hackers accessed Telegram messaging accounts in Iran – Joseph Menn, Yeganeh Torbati. Reuters. Available at: https://www.reuters.com/article/us-iran-cyber-telegram-exclusive-idUSKCN10D1AM